Daichi Tajima, Yoshihiro Kanamori, Yuki Endo

University of Tsukuba

Eurographics 2025

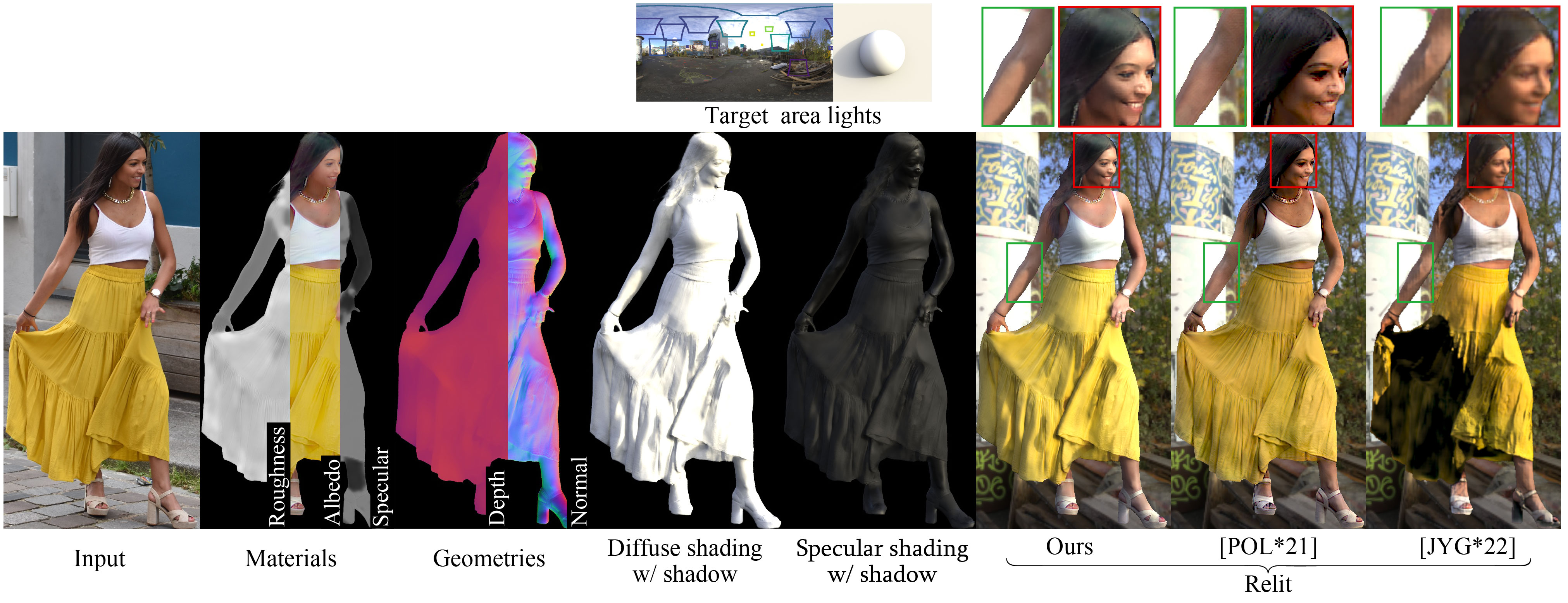

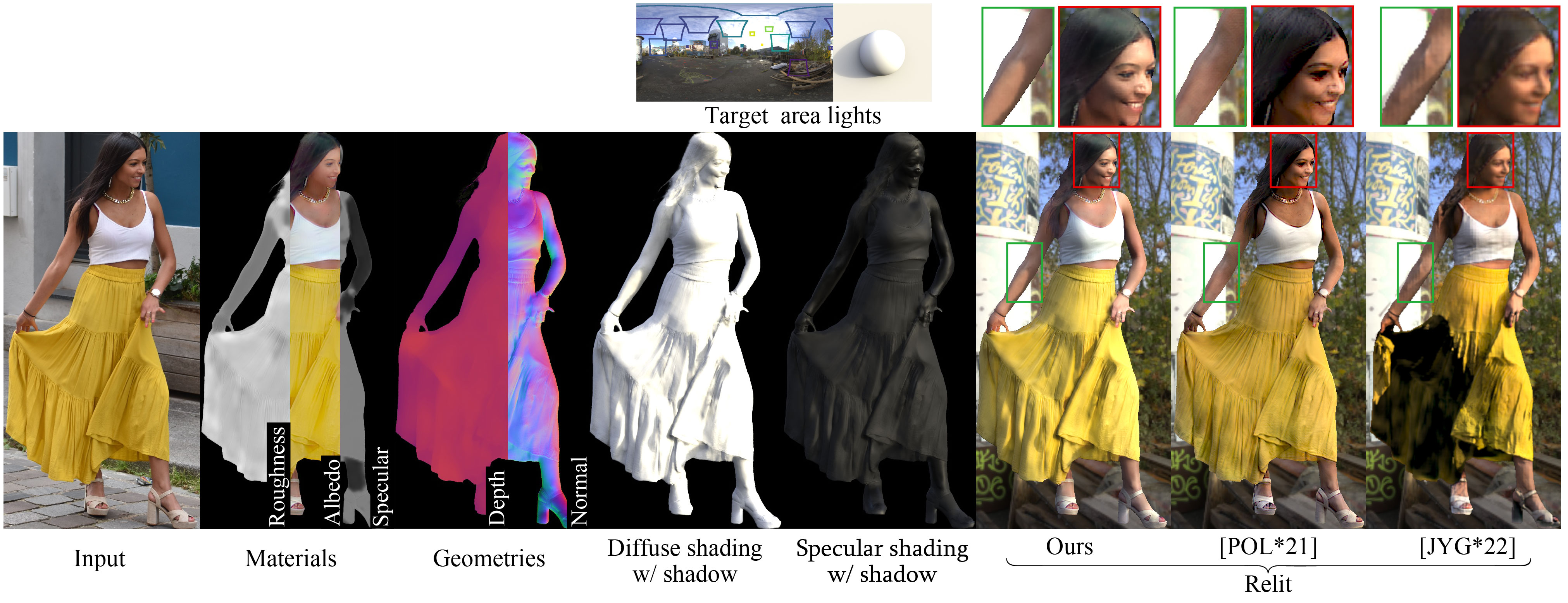

Relighting of human images enables post-photography editing of lighting effects in portraits. The current mainstream approach uses neural networks to approximate lighting effects without explicitly modeling the physics of shading. As a result, it often struggles to represent high-frequency shadows and shading. In this paper, we propose a two-stage relighting method that reproduces physically based shadows and shading from low to high frequencies. The key idea is to approximate an environment light source with a fixed number of area light sources. The first stage performs supervised inverse rendering from a single image using neural networks and calculates physically based shading. The second stage then calculates the shadow for each area light and sums the per-light results to render the final image. To support the area-light approximation of environment lighting, we make soft shadow mapping differentiable. We demonstrate that our method plausibly reproduces all-frequency shadows and shading caused by environment illumination, which existing methods have had difficulty reproducing.
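The per-light summation described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a purely diffuse surface, treats each area light as a single direction with an RGB radiance, and takes the soft-shadow result as a given visibility value in [0, 1] per light. The names `AreaLight` and `relight_pixel` are hypothetical and chosen only for this illustration.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class AreaLight:
    """Hypothetical stand-in for one area light (assumption, not from the paper)."""
    direction: Vec3  # unit vector from the surface toward the light
    radiance: Vec3   # RGB intensity of the light


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def relight_pixel(
    albedo: Vec3,
    normal: Vec3,
    lights: Sequence[AreaLight],
    visibilities: Sequence[float],
) -> Vec3:
    """Sum shadowed diffuse shading over all lights, then apply albedo.

    visibilities[k] is the fraction of light k that reaches the pixel
    (1.0 = fully lit, 0.0 = fully shadowed); here it is simply an input.
    """
    if len(lights) != len(visibilities):
        raise ValueError("one visibility value is required per light")

    total = [0.0, 0.0, 0.0]
    for light, visibility in zip(lights, visibilities):
        # Lambertian cosine term, clamped so back-facing lights add nothing.
        cosine = max(0.0, dot(normal, light.direction))
        for c in range(3):
            total[c] += light.radiance[c] * cosine * visibility

    return (albedo[0] * total[0], albedo[1] * total[1], albedo[2] * total[2])
```

With a fixed number of lights, the loop has a fixed length, so every term in the sum stays a simple product of shading and visibility. In this simplified setting, the final color depends smoothly on each visibility value, which is why a differentiable soft-shadow computation matters for training.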

Keywords: Image manipulation; Rendering

@article{tajimaEG25,
  author  = {Daichi Tajima and Yoshihiro Kanamori and Yuki Endo},
  title   = {All-frequency Full-body Human Image Relighting},
  journal = {Computer Graphics Forum (Proc. of Eurographics 2025)},
  volume  = {},
  number  = {},
  pages   = {--},
  year    = {2025}
}

Last modified: Apr. 2025
